Duration: 16 weeks

My Roles: UX researcher, UX designer

In the spring of 2023, I took the course HCI Research Project as part of my master's program. Together with Eva-Maria Strumegger and Luzie Schroeter, I examined HCI-related scientific literature, formulated a meaningful research question, conducted research through a workshop, developed a prototype based on our findings, and then tested and analyzed it. The result was a prototype that guides users through generating effective prompts for AI-generated emotional images. Our work culminated in a scientific research paper that we published in the Proceedings of the HCI Students Conference ’23. Our paper can be read here.

Text-to-image generators have transformed the creative landscape by converting text descriptions into unique visual representations. However, text as an input modality presents challenges for users because it requires precise instructions [1]. Conveying emotions through text-to-image prompts is particularly challenging [2], and research addressing user needs and the usability of such systems has so far been limited [3, 4, 5].

As my group researched ways to facilitate the creation of effective prompts, we came across the idea of structuring searches to produce prompts. Liu et al. [6], for instance, developed a structured search system called "Opal" to guide users through the generation of text-to-image prompts for news illustrations. The results of their study were striking:

“Users with [the structured search system] generated two times more usable results than users without.”

In contrast to Liu et al., we investigated the usefulness of different UI components for producing or editing images with text-to-image generators, and we focused on emotionally rich images for mood boards rather than news illustrations.

After reviewing related work, we formulated the following research question to guide us:

“What UI components might be useful for a structured search to generate emotional imagery for creative mood boarding using text-to-image generators?”

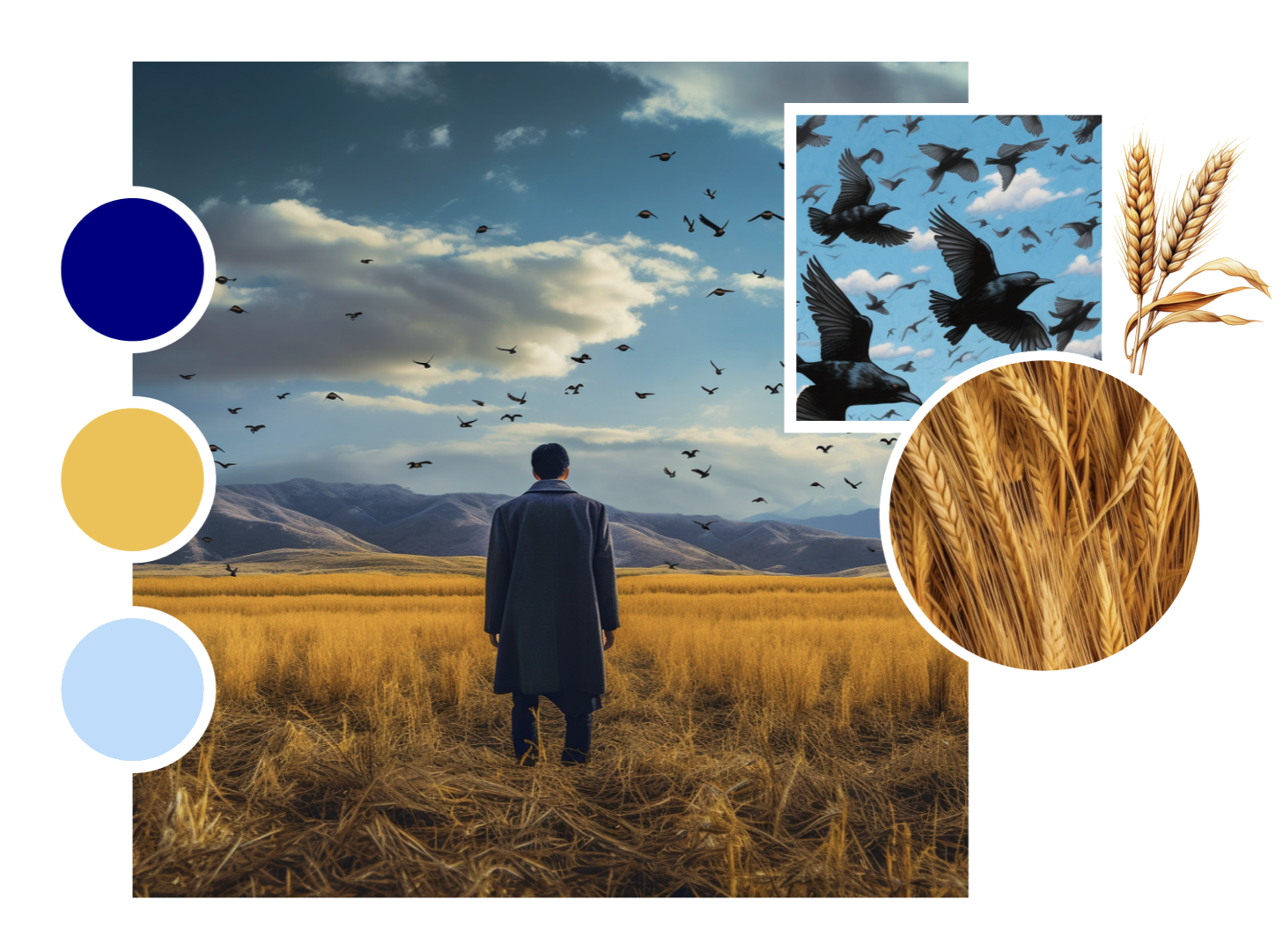

We chose to focus on mood boards because they are a visual medium centered on communicating emotions [7, 8, 9] and because research in this area was lacking.

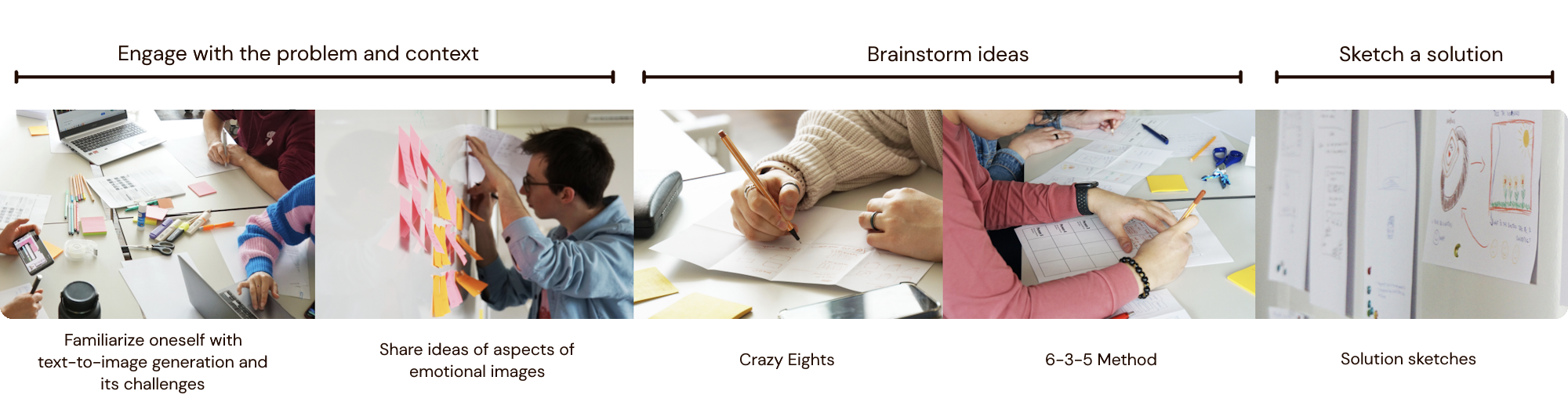

To address our research question, we conducted a co-design workshop to gather ideas for useful UI components. Our participants were Human-Computer Interaction students with previous experience in creating mood boards and using AI image generator tools. Inspired by the Design Thinking methodology [10], we divided our workshop into the following phases:

The following themes emerged from our thematic analysis of participants' input:

1. Context and depicted elements are the basis of emotional images

2. Ambience contributes to images being perceived as emotional

3. Colors convey emotions

4. Objects or subjects that express feelings make an image emotional

5. An emotional image tells a story

6. Personal experiences influence how we perceive emotional images
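To make the link between these themes and a structured search more concrete, the Python sketch below shows one hypothetical way that selections from separate UI components could be assembled into a single text-to-image prompt. The `EmotionalImageQuery` class, its field names, and the comma-separated prompt format are illustrative assumptions, not Emi's actual implementation.

```python
from dataclasses import dataclass, field


@dataclass
class EmotionalImageQuery:
    """Hypothetical data model: each field mirrors one workshop theme.
    This is an illustration only, not Emi's real data model."""
    subject: str                                      # theme 4: subject expressing a feeling
    context: str = ""                                 # theme 1: setting and depicted elements
    ambience: str = ""                                # theme 2: mood / atmosphere
    colors: list[str] = field(default_factory=list)   # theme 3: colors conveying emotion
    story: str = ""                                   # theme 5: the narrative moment

    def to_prompt(self) -> str:
        """Join the non-empty fields into one comma-separated prompt string."""
        parts = [self.subject]
        if self.story:
            parts.append(self.story)
        if self.context:
            parts.append(f"in {self.context}")
        if self.ambience:
            parts.append(f"{self.ambience} atmosphere")
        if self.colors:
            parts.append(" and ".join(self.colors) + " color palette")
        return ", ".join(parts)


if __name__ == "__main__":
    query = EmotionalImageQuery(
        subject="an elderly man smiling",
        story="waving goodbye to his grandchild",
        context="a rainy train station",
        ambience="nostalgic",
        colors=["muted blue", "warm amber"],
    )
    print(query.to_prompt())
```

Theme 6 (personal experiences) is deliberately left out of the sketch, since it describes how viewers perceive an image rather than something a prompt can specify.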

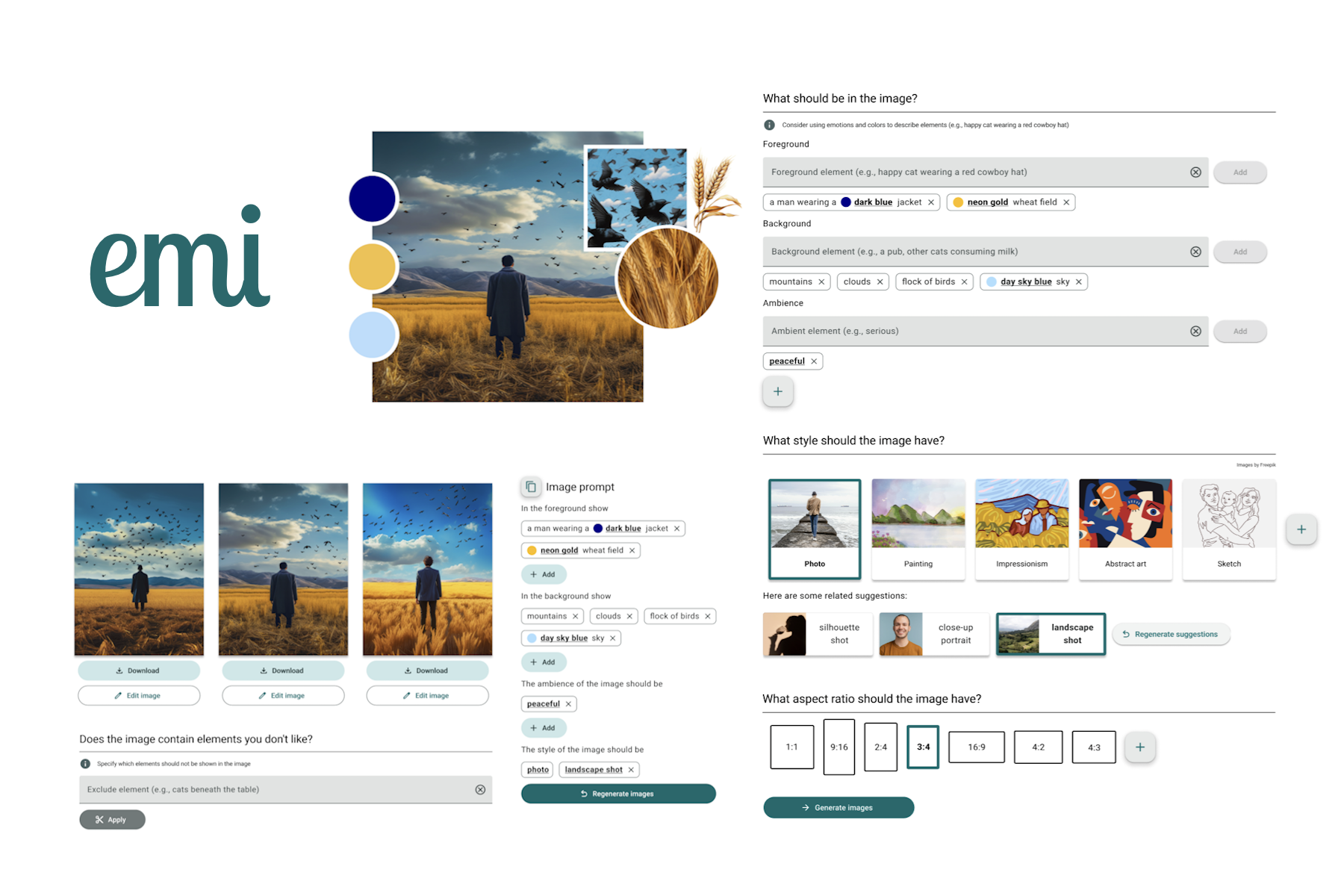

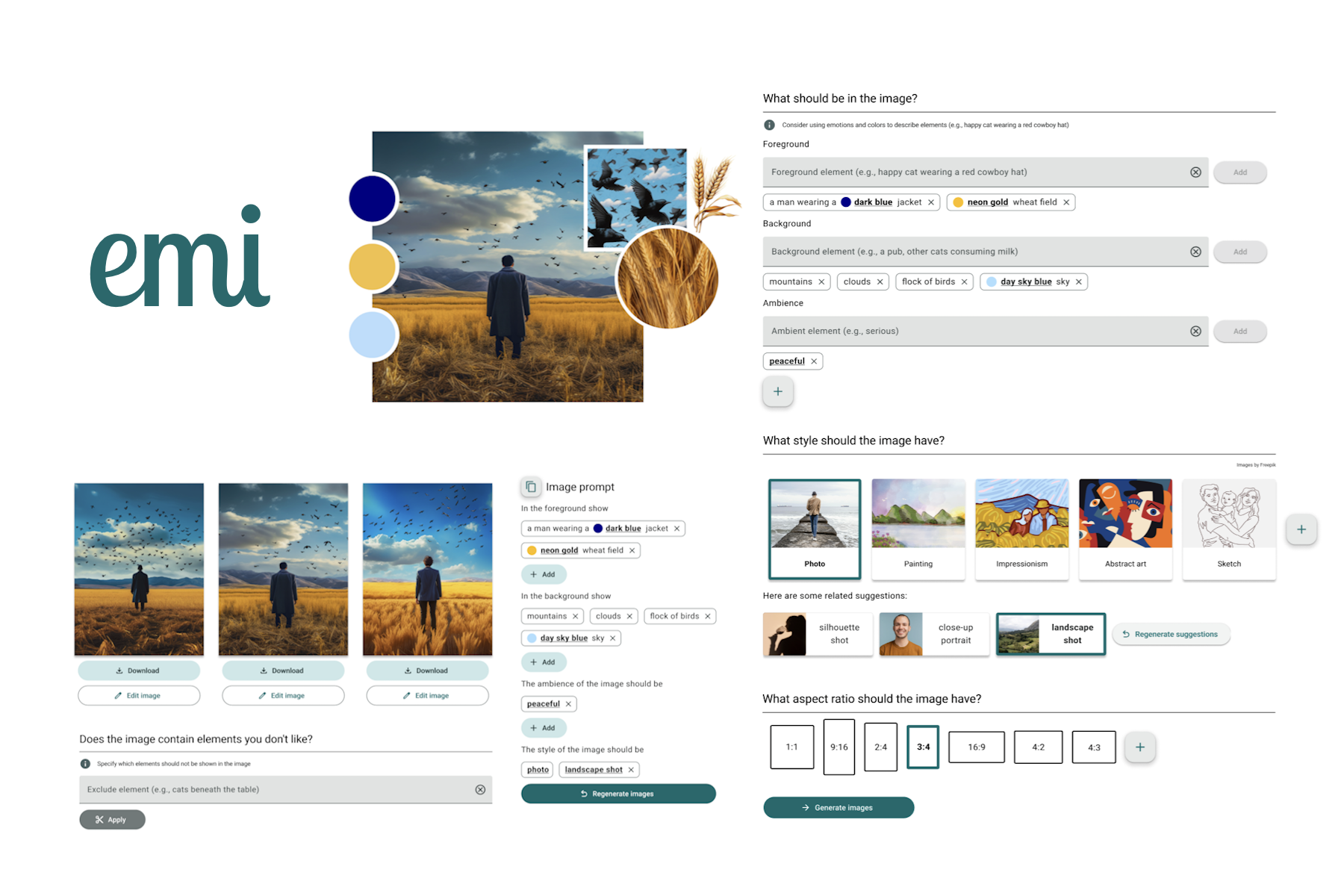

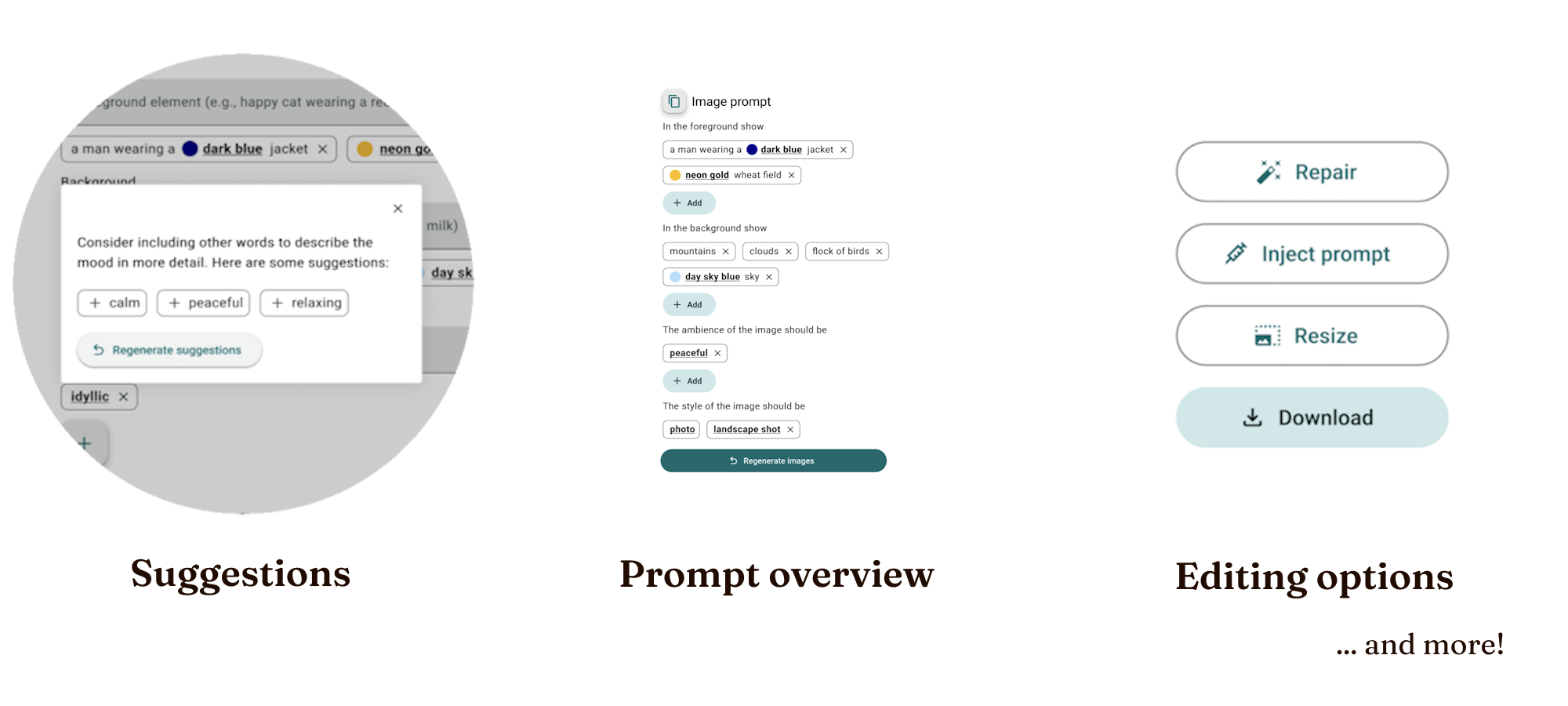

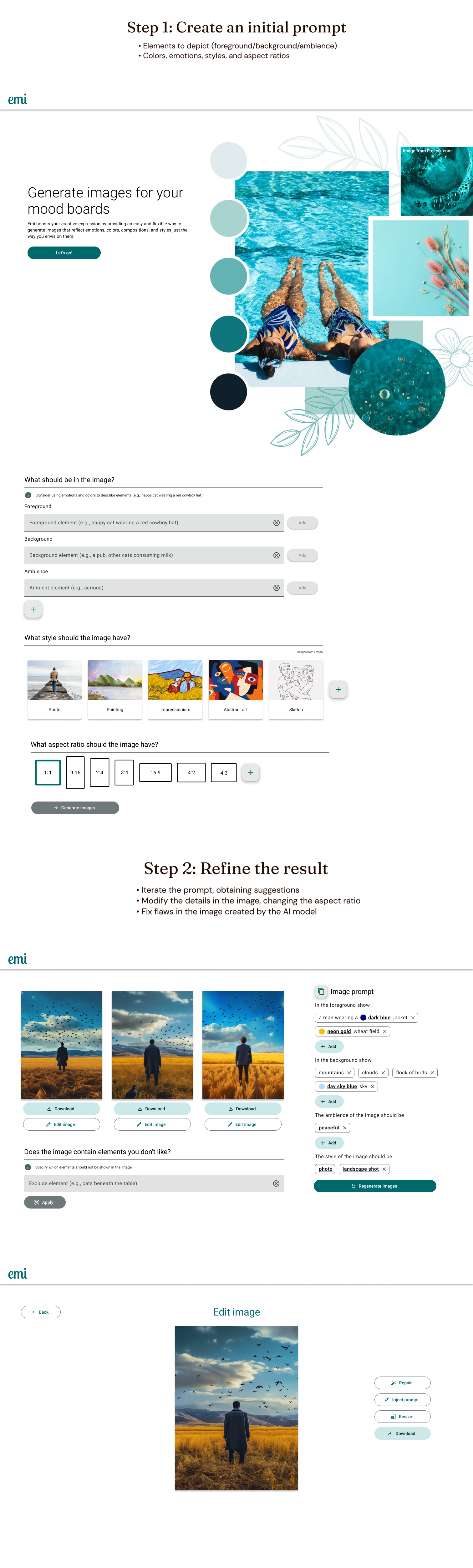

Our scenario-based clickable prototype, called "Emi," was based on the insights gained from the co-design workshop as well as the design guidelines for prompt engineering for generative text-to-image models developed by Liu and Chilton. It was divided into the following two steps:

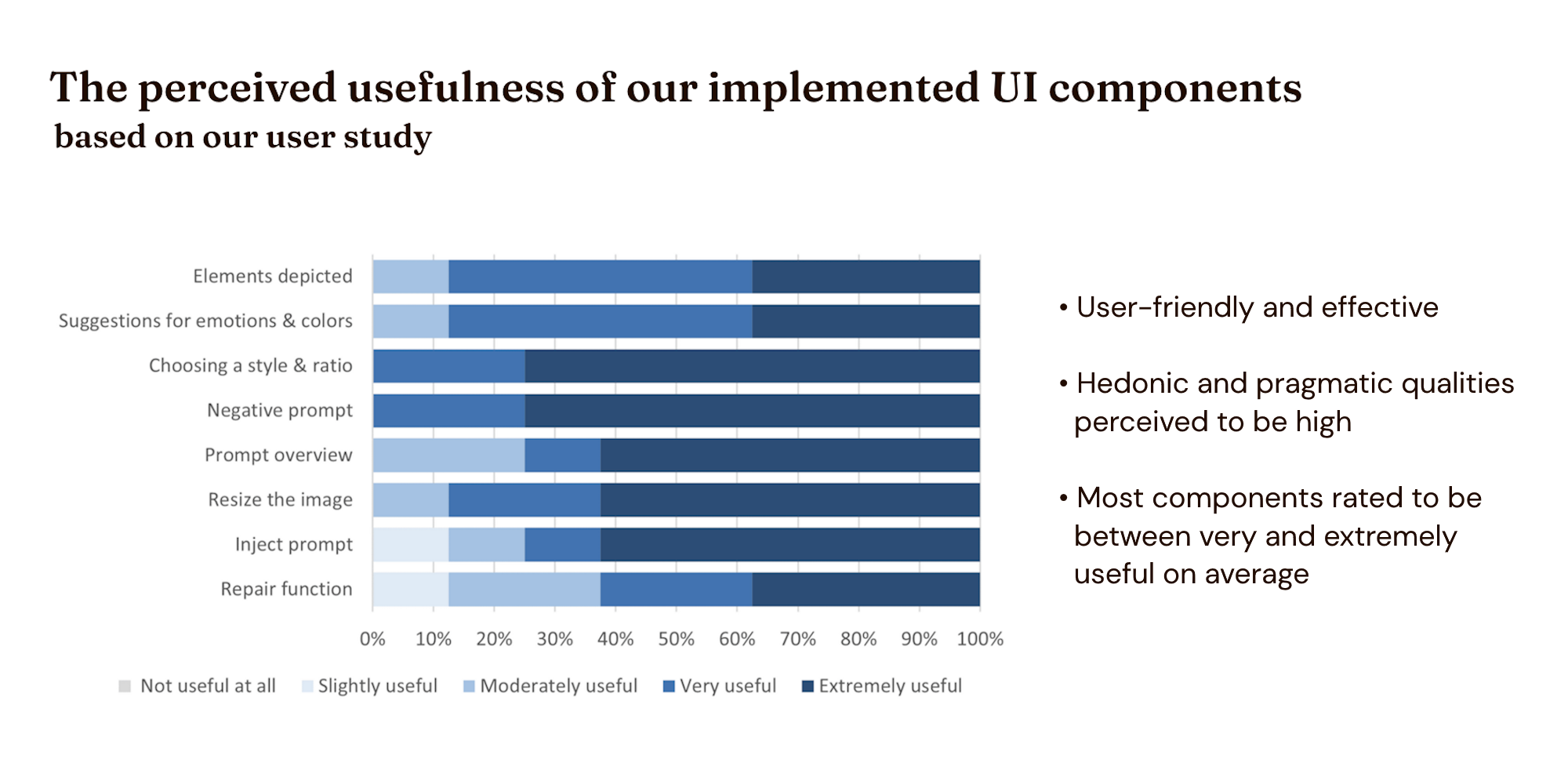

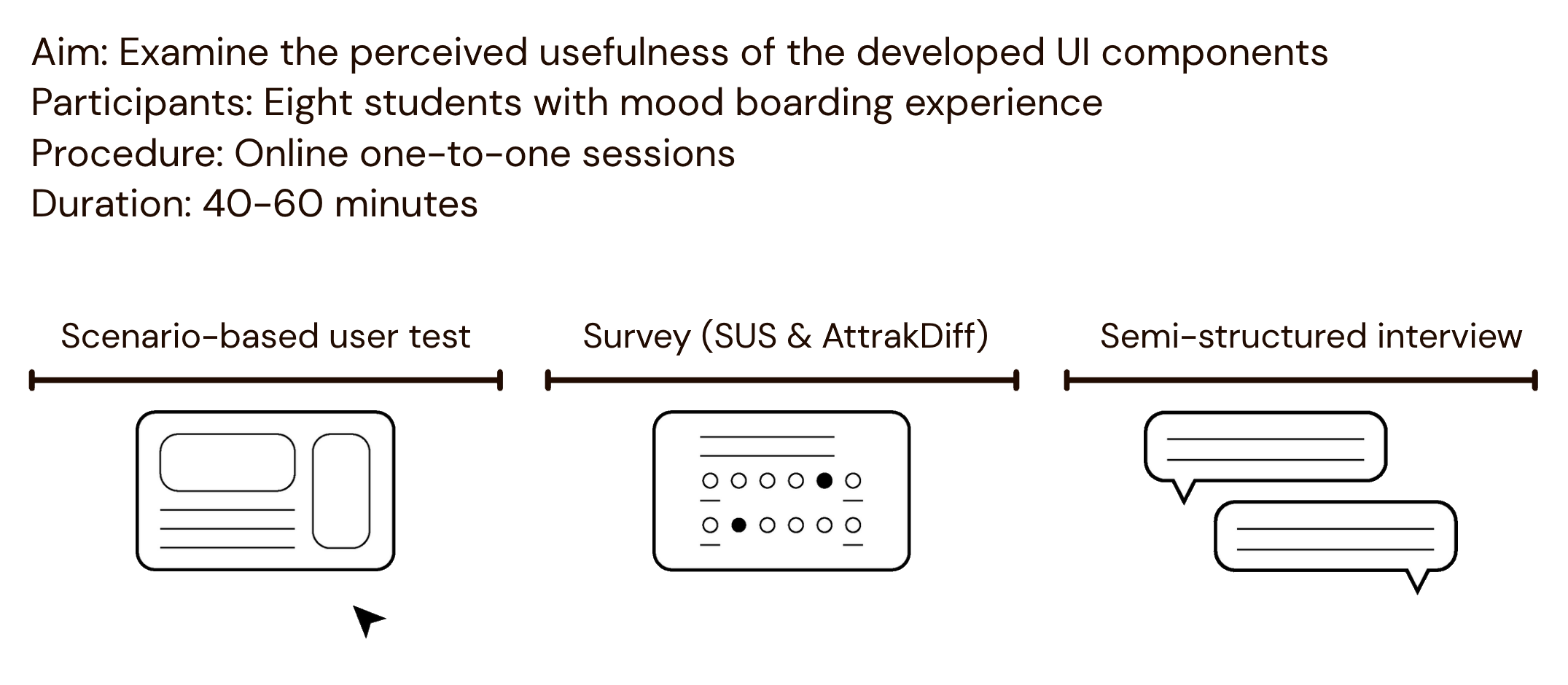

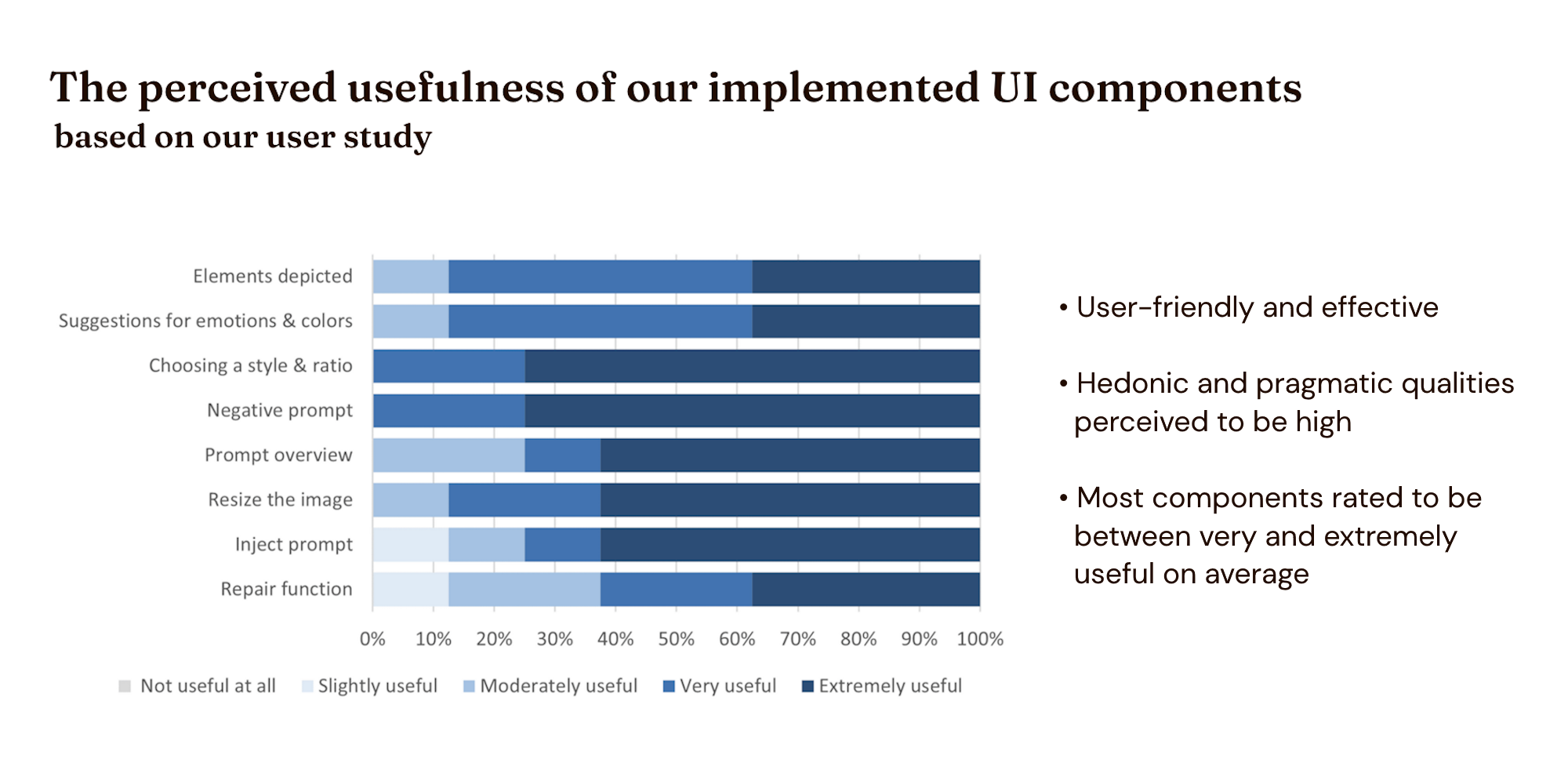

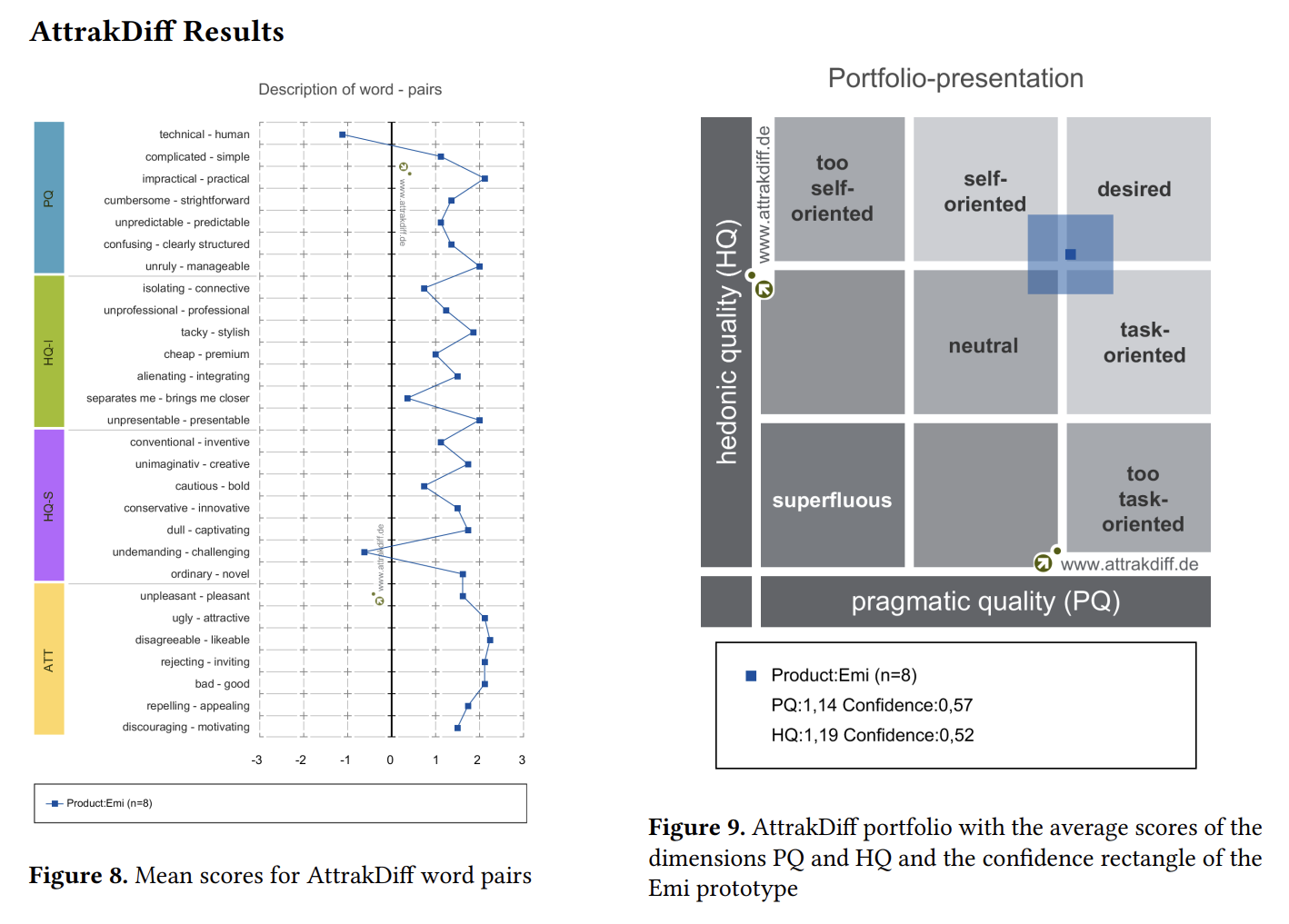

We conducted a three-stage user test to investigate the usability as well as the hedonic and pragmatic qualities of the user experience when using the Emi prototype. Moreover, we examined the perceived usefulness of each UI component when it comes to creating emotional images.

Our evaluation shows that Emi is user-friendly and effective in guiding the creation of emotional images for mood boards, and that participants perceived its hedonic and pragmatic qualities as high. Emi received a System Usability Scale (SUS) score of 75.94 (on a scale from 0 to 100), an AttrakDiff score of 1.14 for pragmatic quality, and an average AttrakDiff score of 1.19 for hedonic quality (both on a scale from -3 to +3).
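For readers unfamiliar with these questionnaires, the SUS score follows a standard formula: each of the ten items is answered on a 1-to-5 scale, odd (positively worded) items contribute the response minus 1, even (negatively worded) items contribute 5 minus the response, and the sum is multiplied by 2.5 to yield a value between 0 and 100. A study-level score is typically the mean across participants. The Python sketch below shows this arithmetic; the function names are hypothetical, and the AttrakDiff helper assumes item values are already recoded to the -3 to +3 range and that overall hedonic quality is the mean of the HQ-I (identity) and HQ-S (stimulation) subscale means.

```python
from statistics import mean


def sus_score(responses: list[int]) -> float:
    """Score one participant's ten SUS answers (each 1-5) on the 0-100 scale."""
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS expects exactly ten responses between 1 and 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded (contribute r - 1);
        # even items are negatively worded (contribute 5 - r).
        total += (r - 1) if item % 2 == 1 else (5 - r)
    # The raw sum ranges from 0 to 40; multiplying by 2.5 maps it to 0-100.
    return total * 2.5


def study_sus(all_responses: list[list[int]]) -> float:
    """Study-level SUS score: the mean of the per-participant scores."""
    return mean(sus_score(r) for r in all_responses)


def attrakdiff_hq(hq_i: list[float], hq_s: list[float]) -> float:
    """Hypothetical helper: overall hedonic quality as the mean of the HQ-I
    and HQ-S subscale means (assumes items already recoded to -3..+3)."""
    return (mean(hq_i) + mean(hq_s)) / 2
```

For example, a participant who answers 3 ("neutral") on every item scores 50, while answering 5 on every odd item and 1 on every even item gives the maximum of 100.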

I am immensely grateful for having had the opportunity to work on such an interesting and exciting research project with Eva-Maria and Luzie, one that not only deepened my understanding of human-computer interaction but also shaped my approach to design and problem-solving. Reflecting on it, I learned a few valuable lessons:

1. Thorough research early on is essential. Because generative AI had become a hot research topic, our group had to stay well informed about where the field was heading to avoid accidentally replicating prior work or new research that might emerge during our project. Some of our initial research questions had already been addressed by prior research, so we refined them until they were meaningfully novel by comparing existing work, drawing inspiration from the “future work” sections of other papers, and narrowing our focus.

2. Don't overlook pilot tests. In our workshops and user tests, we sometimes had to present information that seemed clear to us but not to participants. Continuously gathering feedback from each other and from others helped us make sure participants would understand us before we presented information to them.

3. Organization pays off. We documented our project in the collaborative software tool Miro, and clearly dividing the different parts of such a large project along a timeline greatly facilitated our workflow.